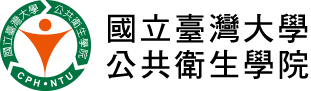

Author: Lung-Wen Antony Chen (陳隆文)

Associate Professor, Department of Environmental and Occupational Health, School of Public Health, University of Nevada Las Vegas

[Editor’s note]: Prof. Lung-Wen Antony Chen is currently a visiting scholar at the Institute of Environmental and Occupational Health Sciences, College of Public Health, National Taiwan University.

It was July 1998 when I arrived at the University of Maryland to study chemical physics at the Institute for Physical Science and Technology. My program was interdisciplinary, with faculty from both science and engineering. Because of my background in surface physics, I was almost recruited to study thin-film technology, but a conversation with Prof. Russell Dickerson, who later became my academic advisor, steered my career toward air pollution. I was fascinated by “Good Ozone” and “Bad Ozone” and the photochemistry behind their rise and demise. Research on the Antarctic ozone hole had just been recognized with a Nobel Prize in 1995, while summertime smog and haze were making headlines across much of the U.S. eastern seaboard. PM2.5 was emerging as a hot topic in the scientific and policy communities; although the term was new to me then, funding and job prospects in PM2.5 research appeared good because of its links to health and climate.

Compared with ozone, particulate matter (PM) pollution is far more complex, owing to attributes such as the size, shape, and chemical composition of these tiny airborne particles. Not long after ambient air quality standards for PM2.5 and PM10 were established under the Clean Air Act, the U.S. Environmental Protection Agency (EPA) realized the need to measure these attributes consistently in order to assess the particles’ sources and toxicity. I was in the right place at the right time when the U.S. EPA kicked off the PM Supersite and related projects around 1999, targeting novel measurement technologies as well as ideas for interpreting the data acquired from these measurements. Working with Prof. Russell Dickerson and Bruce Doddridge at the University of Maryland, and later with Prof. Judith Chow and John Watson at the Desert Research Institute, on these projects, I devoted myself to PM2.5 carbon speciation and receptor modeling, which became my main research areas for the next 25 years.

For any air quality measurement, one cannot overemphasize the importance of quality assurance and quality control (QA/QC). QA dictates the measurement protocols to be followed, while QC identifies questionable data that may not be reproducible. Low-quality data are likely to lead to unreliable conclusions in exposure assessment, source apportionment, or pollution control evaluation. Black carbon (BC) and organic carbon (OC) are major components of PM2.5, originating mainly from the burning of fossil fuels and biomass, yet the earlier literature often quantified BC and OC without consistent methods or QA/QC. Even the two national PM2.5 monitoring networks managed by the U.S. EPA (CSN and IMPROVE) used different methods for several years, making it challenging to draw a clear picture of the abundance, trends, and effects of BC and OC. Our work critically assessed the sources of deviation between the two methods, refined the measurement protocol, and recommended a proper way to report the measurement uncertainty. Eventually, in 2007, the U.S. EPA unified its BC and OC measurements at more than 200 sites under this new protocol, known as IMPROVE_A. Since 2016, we have further extended the method to measure brown carbon.

Receptor modeling is a technique for source apportionment, i.e., attributing pollutants to their sources based on chemical fingerprints and/or time series. The U.S. EPA mandated source apportionment studies when states developed their air quality management plans to meet the air quality standards. The availability of rich chemical datasets from the PM Supersites, along with the development of new software and algorithms, led to a boom in the application of receptor models to analyze the data. However, the lack of proper modeling guidelines made source apportionment results somewhat subjective and dependent on professional experience and judgment. One particular issue we identified after reviewing many of these studies was the improper representation of measurement uncertainties in the model, which propagated into spurious results. A modeling guideline ought to emphasize the concept of QA/QC regardless of the receptor model and dataset used. Besides standardizing the uncertainty estimates, the QA/QC approaches we recommended in the receptor modeling guidelines include reconciling results from different models, obtaining feedback from source profiles and emission inventories, and conducting extensive sensitivity tests.

The air quality management framework established in the U.S., which focuses on long-term monitoring and on emission identification and control, has proven to work well, as evidenced by fewer exceedances of air quality standards nationally today than when the Supersite project started. This is also the case for other countries, including Taiwan, that have made similar efforts. Yet, according to the World Health Organization (WHO), air pollution remains a major global health burden because of dire conditions in some developing countries, many of which lack the financial means even to collect air quality data. I believe that how the U.S. framework can be adapted for these countries, taking technological innovation and cultural differences into account so that the benefit-cost ratio is maximized, will be an important topic for the coming decades. Toward this goal, we initiated a conversation with African stakeholders in 2017 as part of the World Bank Pollution Management-Environmental Health program.

Although air quality has come a long way in developed countries, I also see new challenges emerging, the first being disparity in exposure. Studies have shown that the air pollution burden falls disproportionately on people of lower socioeconomic status, particularly those living near or working in industrial zones, which raises environmental justice concerns. This disparity is often missed by regulatory air quality monitoring stations, which are sparsely located. Integrating these stations with numerous low-cost, personalized sensors is a potential solution for identifying at-risk subpopulations. To make it work, however, obstacles must be overcome, in particular the QA/QC of sensor data collected by citizen scientists and the interpretation of data with higher and more variable uncertainties.

Another challenge affects receptor model-based source apportionment. As pollution controls have cut down major sources, including motor vehicle exhaust, coal-fired power plants, and biomass burning, increasingly stringent air quality standards require us to address sources that were previously ignored. Specific markers for these sources, however, are often not available. One such example is tire wear. Tire wear may rival tailpipe emissions in modern vehicles and may even be a dominant PM2.5 source from electric vehicles, yet few receptor modeling studies have distinguished its contribution to PM2.5. Efforts need to be made to include known tire wear markers, such as oleamide, benzothiazole, and rubber derivatives, in PM2.5 measurements and receptor modeling. Microplastics, biological particles, brown carbon, and secondary organic aerosol are among the other contributors of growing concern. Furthermore, for many jurisdictions, the transport of pollutants from outside plays a more important role today than ever. Ideas for separating local and outside contributions using receptor models, often supplemented with wind or other meteorological analyses, have been presented in the literature. They should be evaluated extensively so that the receptor modeling guidelines can be updated in a timely manner.

Looking back, I appreciate the opportunity to work on air pollution research and to witness appreciable air quality improvements under well-informed policies and management, particularly in the U.S. Despite the many accomplishments, health and climate concerns continue to demand better air quality. In fact, WHO tightened its annual PM2.5 guideline from 10 µg m⁻³ to 5 µg m⁻³ in September 2021, leaving more than 90% of countries worldwide at risk of poor air quality. There are ample opportunities for those interested in entering this field, in both developing and developed countries, whether in fundamental or applied research. I look forward to witnessing the next revolution in this field and being part of it.

Pic 1. Working on the carbon speciation method in a laboratory at the University of Rostock, Germany.

Pic 2. Demonstrating low-cost air quality sensors at the Lagos State Ministry of the Environment, Nigeria.